Can AI Use Be Detected in Academic Writing? Here's What You Need to Know

The Rise of AI Detection in Academia

With the increasing use of generative AI in writing, educators and institutions are understandably concerned about how to fairly review work by students and authors. In response to the challenge of identifying AI writing, AI detection tools have popped up on the market, touting their ability to flag content generated by platforms such as ChatGPT and Gemini.

Though it might seem simple enough to run your essay through an AI detector as you would through a plagiarism checker, AI detection tools are flawed in their consistency, reliability, and transparency.

Low Accuracy and High Bias

Many AI detectors produce both false positives and false negatives: human-written text is wrongly identified as AI generated, or AI-generated text is not flagged. Studies on the efficacy of AI detection tools have found inconsistent classifications of human-written content and weak performance on AI content that has been manually edited or translated.
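To make the two error types concrete, here is a minimal Python sketch of how an accuracy study might tally them from a set of texts whose true origin is known. The `DetectorResult` type, the `error_rates` function, and the sample data are all hypothetical and for illustration only; they do not reflect any particular detector or study.

```python
from dataclasses import dataclass


@dataclass
class DetectorResult:
    is_ai: bool    # ground truth: was the text actually AI generated?
    flagged: bool  # did the detector label it as AI generated?


def error_rates(results: list[DetectorResult]) -> tuple[float, float]:
    """Return (false_positive_rate, false_negative_rate) for labeled results."""
    human = [r for r in results if not r.is_ai]
    ai = [r for r in results if r.is_ai]
    # False positive: human-written text wrongly flagged as AI
    fp = sum(r.flagged for r in human)
    # False negative: AI-generated text that the detector missed
    fn = sum(not r.flagged for r in ai)
    fpr = fp / len(human) if human else 0.0
    fnr = fn / len(ai) if ai else 0.0
    return fpr, fnr


# Hypothetical sample: four human-written texts, two AI-generated texts
sample = [
    DetectorResult(is_ai=False, flagged=False),
    DetectorResult(is_ai=False, flagged=True),   # false positive
    DetectorResult(is_ai=False, flagged=False),
    DetectorResult(is_ai=False, flagged=False),
    DetectorResult(is_ai=True, flagged=True),
    DetectorResult(is_ai=True, flagged=False),   # false negative
]
print(error_rates(sample))  # (0.25, 0.5)
```

Note that the two rates are computed over different groups (human texts versus AI texts), which is why a detector can look strong on one measure and weak on the other.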

At the beginning of 2023, OpenAI launched an AI classifier for identifying AI-written text but shut it down about six months later, citing its "low rate of accuracy." Similarly, in August 2023, Vanderbilt University disabled Turnitin's AI-detection feature, citing the tool's reported 1% false positive rate and concerns that AI detectors are more likely to flag text written by nonnative English writers as AI generated.
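A 1% false positive rate can sound small, so it helps to work through what it implies at scale. The Python sketch below is a minimal illustration: the paper counts are hypothetical, and `chance_of_any_false_flag` assumes each check is independent, which is a simplifying assumption rather than a property of any real detector.

```python
def expected_false_flags(num_human_papers: int, false_positive_rate: float) -> float:
    """Expected number of human-written papers wrongly flagged as AI."""
    return num_human_papers * false_positive_rate


def chance_of_any_false_flag(num_essays: int, false_positive_rate: float) -> float:
    """Probability that at least one of a writer's essays is wrongly flagged,
    assuming (as a simplification) that every check is independent."""
    return 1 - (1 - false_positive_rate) ** num_essays


# Hypothetical figures for illustration only
print(expected_false_flags(10_000, 0.01))              # 100.0
print(round(chance_of_any_false_flag(10, 0.01), 3))    # 0.096
```

Under these assumptions, an institution checking 10,000 genuinely human-written papers would expect about 100 false accusations, and a student who submits 10 essays would face roughly a 1 in 10 chance of at least one false flag.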

Lack of Transparency

It's unclear how AI detectors are trained or what they measure to determine whether a sample of text was AI generated. A bit like the Wizard of Oz, they're hidden behind a curtain … and may be a little less mighty than expected or desired.

Burstiness (how much sentence length and structure vary across a text) and perplexity (how predictable each next word is to a language model) are commonly mentioned as parameters of AI detection. Ultimately, however, these AI detection systems are black boxes: the platforms neither disclose the content their models are trained on nor explain what their models look for when detecting AI use.
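Because detectors don't publish their methods, the following Python sketch is only one common way to operationalize the two ideas, not how any specific product works. Here "burstiness" is assumed to be the coefficient of variation of sentence length (it ignores sentence structure entirely), and "perplexity" is computed from a list of per-token probabilities that, in practice, a language model would supply; the function names and sample sentences are hypothetical.

```python
import math
import re
from statistics import mean, pstdev


def sentence_lengths(text: str) -> list[int]:
    """Naively split text into sentences on ., !, or ? and count words in each."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Standard deviation of sentence length divided by the mean.
    Higher values mean sentence lengths vary more."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return pstdev(lengths) / mean(lengths)


def perplexity(token_probs: list[float]) -> float:
    """exp of the average negative log-probability a model assigned to each
    actual next token. Lower values mean the text was more predictable."""
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)


uniform = "The cat sat down. The dog ran off. The bird flew up."
varied = "Stop. The committee, after weeks of debate, finally approved the budget. Why?"
print(burstiness(uniform))            # 0.0
print(round(burstiness(varied), 2))   # 1.06

# Hypothetical token probabilities: a model that finds every token a coin flip
print(round(perplexity([0.5, 0.5, 0.5, 0.5]), 3))  # 2.0
```

This also shows why such signals can misfire: a carefully edited human text with even sentence lengths and common word choices (a formal speech, for example) can score as low-burstiness, low-perplexity "AI-like" text.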

Breakability and Inability to Keep Up with New AI Models

Because AI detectors are trained on output from older models or on fixed datasets, they struggle to keep up with newer and more complex versions of generative AI platforms. Not only is it difficult for them to distinguish between AI- and human-written content in general, but AI-generated text can also be manually edited to sound more human or even processed through AI detection bypassing tools.

Of course, these tools have their own challenges …

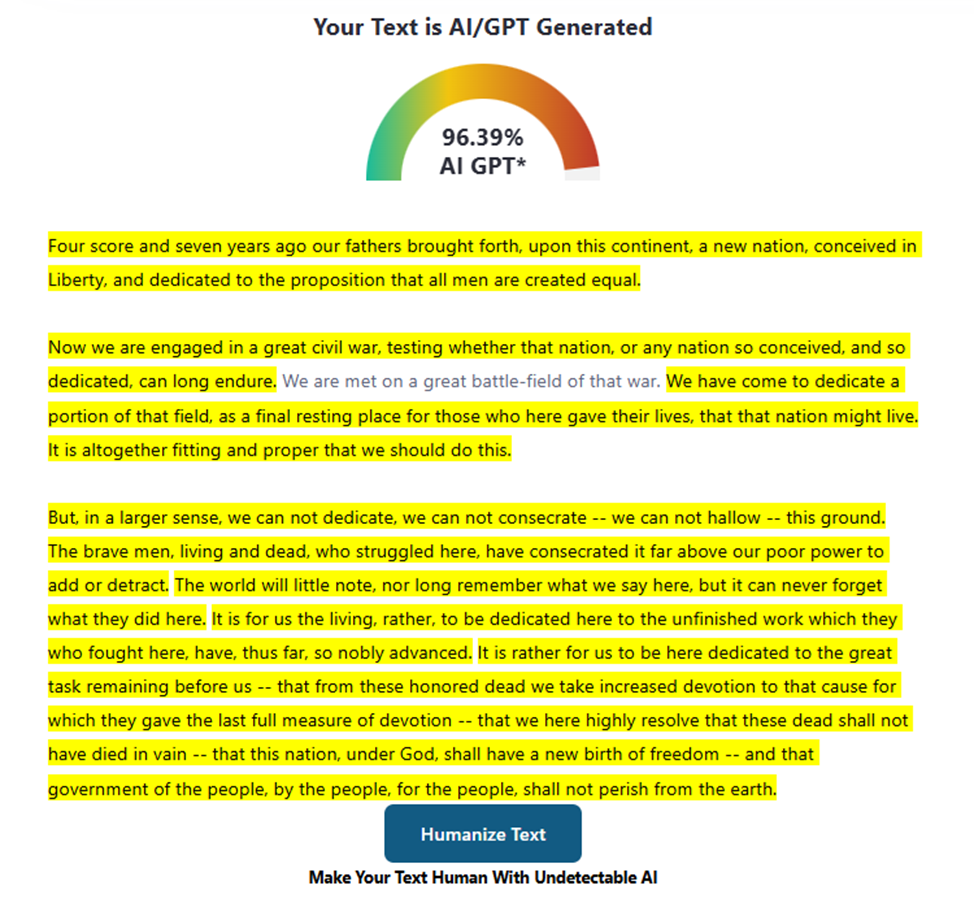

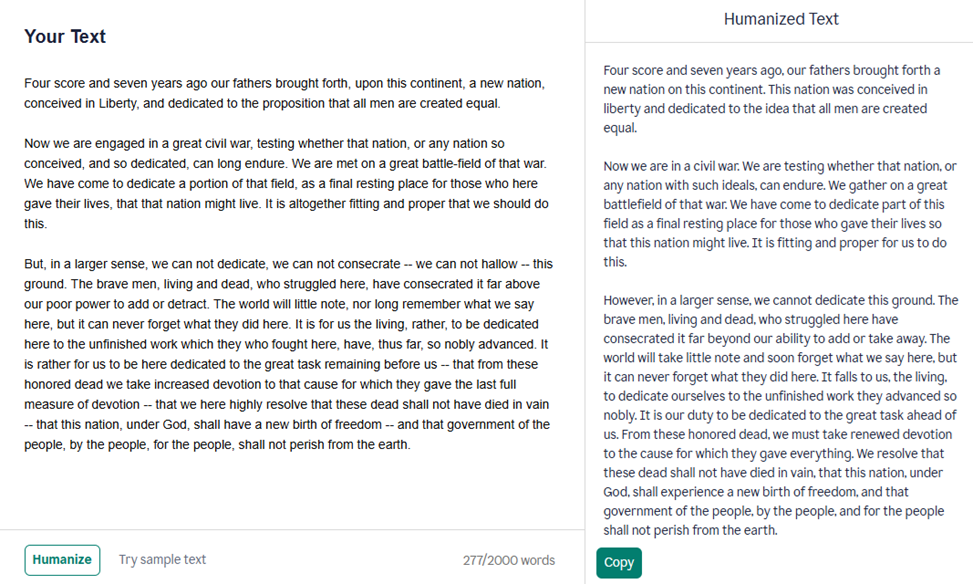

Test Case: The Gettysburg Address

Let's look at a historical piece of human writing, the Gettysburg Address as delivered by Abraham Lincoln in 1863, and see what happens when we run it through an AI detector and a humanizing AI tool.

Figure 1. The Gettysburg Address—AI generated?

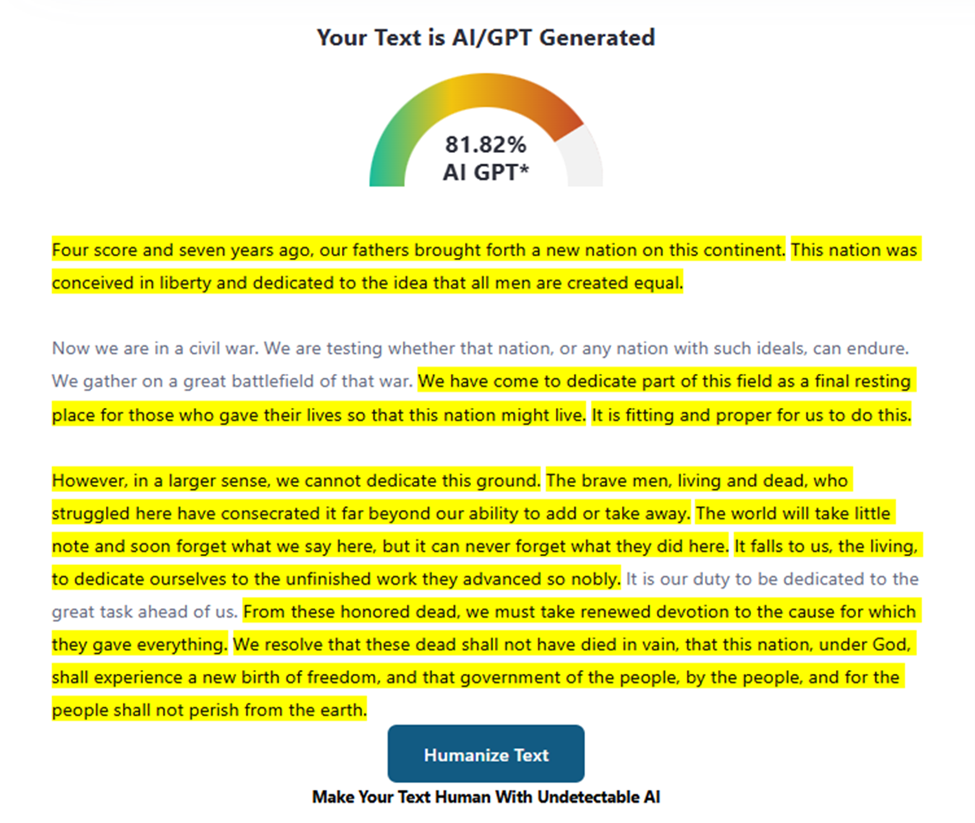

So we have one of the great speeches in human history, written more than a century and a half before our current age of AI tools, and the AI checker rated it 96.39% AI written. Abraham Lincoln may have had great foresight, but he likely couldn't reach into the future. When we run the text through a "humanizing" tool, the result is far more boring, stripped of its originality and striking cadence, yet it is still rated a remarkable 81.82% AI generated. In other words, a human-written text is judged to be AI writing, and when AI rewrites that text to sound less like AI, it is still judged to be AI writing. President Lincoln might be happy he's not around to see this …

Figure 2. "Humanizing" the Gettysburg Address

Figure 3. Detecting AI in the humanized Gettysburg Address

What Can You Do?

Whether you're writing an essay for a university course or drafting an article to submit to a journal, you should familiarize yourself with the AI policy of your professor, institution, or target journal. Ideally, they should provide clear guidelines for the appropriate use of AI. In line with these policies, it's important to be transparent about any AI use and to acknowledge or cite it properly, but the safest option is to work with an experienced human editor who can support you in your work and guide you around potential pitfalls.

Records of your written work can also be very helpful if you are asked whether you used generative AI or about the score your work received from an AI detector. Materials such as brainstorming notes, essay outlines, and early drafts can show how your work developed from beginning to end.

Though you can't control whether your writing will be evaluated using an AI detector, you can make sure that you are well-informed on AI and diligent in handling and developing your own work.